Transforming Predictive Policing

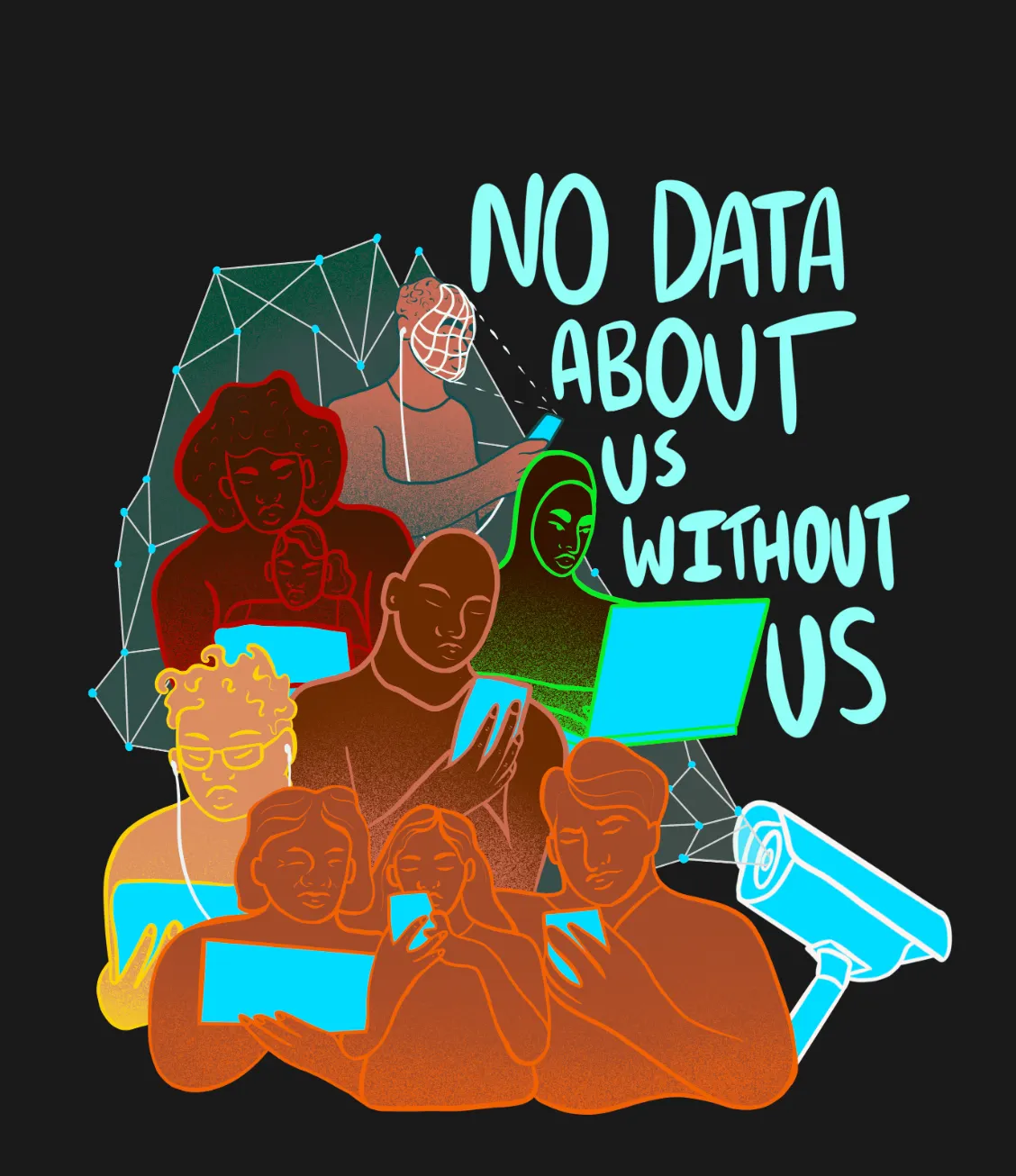

Using online social networks to identify suspects and potential criminals is part of a push within law enforcement towards big data surveillance and predictive policing analytics. However, critics warn that the use of predictive policing can enhance racial discrimination in the criminal justice system.

In response to the brutal murder of George Floyd by Minneapolis police in May 2020, Black Lives Matter (BLM) protests erupted across the country. Mobilized through social media, protesters took to the streets, demanding an end to police brutality in one of the most publicized and organized demonstrations for racial justice in American history. Social networks and video technology were catalysts for the BLM protests, but police departments have used these same technologies to create dangerous new opportunities for surveillance. Allie Funk, a Senior Research Analyst for Technology and Democracy at Freedom House, explained how police departments used social networks to surveil Black Lives Matter protesters and organizers. In Cookeville, Tennessee, FBI agents from the Joint Terrorism Task Force (JTTF) used social media to identify several organizers of BLM protests. The federal agents showed up unannounced at the organizers’ homes and places of work to question them about their Facebook posts and protest plans.

Using online social networks to identify suspects and potential criminals is part of a push within law enforcement towards big data surveillance and predictive policing analytics. The Brennan Center for Justice at NYU Law explains predictive policing as “using algorithms to analyze massive amounts of information in order to predict and help prevent future crimes.” In the aftermath of 9/11, information sharing became a priority. The Homeland Security Act of 2002 directed federal dollars towards developing local and state predictive policing measures to prevent another large-scale terror attack. Hubs for surveillance, called ‘Fusion Centers,’ were created to promote open communication between agencies; they pooled public and private data, including police records, pictures, and videos, from law enforcement and private citizens. Surveillance in the name of crime prevention only intensified as predictive analytics developed, and law enforcement agencies began partnering with the private sector to collect, store, and analyze data. Police ultimately rely on private-sector surveillance tools because they come with less red tape: privately collected data is subject to fewer legal checks and reporting requirements. [1]

Law enforcement agencies across the United States employ a plethora of private programs and algorithmic tools in the hopes of using predictive policing to stop crime before it’s committed. Standard algorithmic surveillance tools, which draw on data from both private sources and law enforcement itself, include automatic license plate readers (ALPRs), social media surveillance software, facial recognition technology, and social network analysis. With all of these tools, information is taken from the source and used to map social relationships, identify suspects, and predict future behavior. [2] One success story lies in the city of New Orleans’s NOLA for Life program, which used tools from Palantir, a private data analytics company that collects, stores, and analyzes data, to approach crime from a public health perspective. The city mapped everything from likely victims of crime to at-risk perpetrators to gang meeting locations and intervened with 29 different social services programs. New Orleans experienced a 55% reduction in group and gang-involved murders between 2011 and 2014, and the use of data to inform crime reduction strategies was a crucial factor. [3] Even so, the public was not made aware of the use of Palantir technologies in the city, and such technologies are often used to direct punitive measures rather than as part of a holistic strategy. The use of private surveillance programs also creates immense opportunity for abuse and raises privacy concerns. Additionally, critics warn that the use of predictive policing can actually enhance racial discrimination in the criminal justice system.

Proponents of predictive policing strategies often point to the impartial nature of data, claiming that its use can reduce racial bias in the criminal justice system because ‘numbers are neutral.’ At first glance, this appears to be true: evidence shows that crime clusters in particular locations and among particular people. For example, in Boston, 70% of shootings over a three-decade period were concentrated in 5% of the city, and data shows that the perpetrators of violent crime are most likely to be male, young, and have a juvenile criminal record. [4] With this knowledge in mind, feeding in information about where and by whom past crimes were committed should, in theory, yield a practical and objective prediction of future crimes. We cannot forget, however, that algorithmic structures are created within existing power hierarchies and institutions.

In her book Predict and Surveil: Data, Discretion, and the Future of Policing, Sarah Brayne explains the social side of data, writing that “databases are populated by information collected as a result of human decisions, analyzed by algorithms created by human programmers, and implemented and deployed on the street by human officers following supervisors’ orders and their own intuition” (Brayne 135). Data is not created in a vacuum; it is molded by the people who shape its collection, deployment, and governance. As a result, human biases creep into the algorithmic structures used in policing.

The LAPD’s Operation LASER program is one example of a predictive policing tool that reinforced existing racial discrimination in the criminal justice system while shrouded in data’s appearance of impartiality. The program was rolled out in 2011 to target the individuals deemed most likely to commit a crime, based on criminal history, field interview cards, traffic citations, daily patrols, and ‘quality’ interactions with the police, among other inputs. Each individual was given a ‘risk’ score and potentially placed on a Chronic Offender List, which was sent to police departments. Critics of the program voiced concern about the potential for feedback loops: if an individual interacts with the police, their score goes up, which justifies more interaction with that individual, and the cycle repeats. Additionally, although the data seems unbiased, every number fed into the program comes from past records, so earlier police bias can still trickle into the data sets. After pressure mounted from the Stop LAPD Spying Coalition, the LAPD Inspector General conducted an internal audit of the LASER program and found irregularities in the “chronic offender” system. The audit revealed that almost half of those designated as “chronic offenders” had zero or only one arrest and that interactions with “chronic offenders” lacked consistency. In 2019, the LAPD shut down LASER in light of the audit’s findings.
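The feedback loop that critics described can be illustrated with a toy simulation. The sketch below is not LASER's actual scoring formula, which is not given here; the point value, the stop threshold, and the function name `simulate` are all hypothetical choices made only to show the mechanism. In this model a person never commits a crime, so any rise in their score comes purely from being stopped because the score was already high.

```python
# Toy model of a score-driven feedback loop. All numbers are invented
# for illustration; this is NOT the LASER scoring formula.

POINTS_PER_CONTACT = 1   # hypothetical: each police contact adds one point
STOP_THRESHOLD = 2       # hypothetical: officers seek out anyone at or above this score


def simulate(initial_score, periods):
    """Return the score after each period for one person.

    The person commits no crime in this model. The score changes only
    because a high score triggers a stop, and the stop is itself a
    recorded contact that raises the score.
    """
    score = initial_score
    history = []
    for _ in range(periods):
        if score >= STOP_THRESHOLD:
            score += POINTS_PER_CONTACT  # the stop is logged as a new contact
        history.append(score)
    return history


# Two people with identical behavior; one starts a single point higher.
below_threshold = simulate(initial_score=1, periods=5)
at_threshold = simulate(initial_score=2, periods=5)
print("starts at 1:", below_threshold)  # [1, 1, 1, 1, 1]
print("starts at 2:", at_threshold)     # [3, 4, 5, 6, 7]
```

A one-point difference in the starting record is enough to separate the two trajectories for good, even though the two people behave identically. That is the sense in which earlier police contact, rather than conduct, can end up driving a score.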

Predictive tools exacerbate inequalities by relying on data from locations that have traditionally been overpoliced. Biased sample sets are baked into algorithms that perpetuate racially biased policing, and these tools are being used as a way to “tech-wash” the pervasive problem of racial bias in police departments. These problems need to be solved not just with data but also with personal intervention, training, and the restructuring of our police departments. Even so, there’s no denying that data does have the potential to reduce crime. When collected equitably, it can help police use their resources efficiently to identify perpetrators of crime and map trends in criminal activity. So how can we maximize the benefits of predictive programs while minimizing their discriminatory features?

Sarah Brayne offers comprehensive strategies for changing how police departments use predictive programs, including using data to ‘police the police.’ Brayne writes that “If the surveillant gaze is inverted, data can be used to fill holes in police activity, shed light on existing problems in police practices, monitor police performance and hit rates, and even estimate and potentially reduce bias in police stops” (Brayne 143). Data can be leveraged to identify and trace errors and trends in policing, as well as to measure the costs of specific tactics. For that to work, the legal structures covering police use of data, especially privately collected data, need to be more robust and focused on transparency. I believe that data, used at its best, can make policing both more efficient and more equitable.
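One concrete way to monitor hit rates is to compare, across groups, the share of stops that actually turn up contraband or lead to an arrest. The sketch below assumes a simplified record format of `(group, found_something)` pairs and uses invented data; the function name `hit_rates` and the group labels are hypothetical and do not come from any real department's system.

```python
from collections import defaultdict


def hit_rates(stops):
    """Compute, for each group, the share of stops that produced a 'hit'.

    stops: iterable of (group, found_something) pairs, where
    found_something is True if the stop turned up contraband or led
    to an arrest. This record format is an assumption for illustration.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, found in stops:
        totals[group] += 1
        if found:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


# Invented example data: four stops for each of two groups.
stops = [
    ("A", True), ("A", False), ("A", True), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = hit_rates(stops)
print(rates)  # {'A': 0.75, 'B': 0.25}
```

A markedly lower hit rate for one group is often read as a sign that its members are being stopped on weaker evidence, though a real analysis would need far more data and would have to account for context such as location and stop type.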

Furthermore, Brayne emphasizes the need for law enforcement agencies to slow down before implementing algorithmic assessment tools, and I agree with her statement that “the onus should fall on law enforcement to justify the use of big data and new surveillance tools prior to mass deployment” (Brayne 142). Brayne notes that the only peer-reviewed study of LASER was conducted by someone from the company that designed it. The deployment of mass predictive analytics tools should be preceded by evaluation from independent agencies, community forums, and collaboration among lawyers, community experts, police officers, and program architects. Technology developers, too, have a responsibility to design programs in the public interest. One strategy involves adding a degree of randomness to predictive programs: sending officers to locations with varying risk levels to reduce bias in the data sets. The predictive policing tool HunchLab does this and more: its database includes only publicly recorded crimes, leaving out petty offenses that correlate with poverty and whose enforcement involves greater officer discretion and potential bias.
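The randomness strategy can be sketched as what is sometimes called an epsilon-greedy choice: most of the time a patrol goes to the location with the highest predicted risk, but with some small probability it goes to a location picked uniformly at random. This is a generic technique offered for illustration, not HunchLab's documented method; the function name `choose_patrol_location`, the location names, the risk scores, and the exploration rate are all hypothetical.

```python
import random


def choose_patrol_location(risk_scores, explore_rate, rng):
    """Pick one location to patrol.

    With probability explore_rate, choose uniformly at random among all
    locations; otherwise choose the location with the highest predicted
    risk. risk_scores maps location name -> predicted risk.
    """
    locations = sorted(risk_scores)  # fixed order so runs are reproducible
    if rng.random() < explore_rate:
        return rng.choice(locations)
    return max(locations, key=lambda loc: risk_scores[loc])


# Invented risk scores for three hypothetical locations.
risk_scores = {"block_a": 0.9, "block_b": 0.4, "block_c": 0.1}
rng = random.Random(0)  # seeded only so the example is repeatable
picks = [choose_patrol_location(risk_scores, 0.2, rng) for _ in range(1000)]
counts = {loc: picks.count(loc) for loc in risk_scores}
print(counts)
```

With an exploration rate of zero, every patrol goes to `block_a`, so the data set only ever records what happens there. A nonzero rate means lower-risk locations are still observed occasionally, which gives the model some evidence against which to check its own predictions.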

Ultimately, these strategies can help reduce biases in algorithmic structures. Still, we can’t reform the current predictive system without first asking ourselves what we see as the goal of successful policing. Is it to reduce crime rates? Is it to keep people safe? Successful policing extends beyond the traditional limits of enforcing the law; it includes addressing the root causes of crime, such as poverty and lack of education, and recognizing when police intervention is genuinely necessary. For example, reforming policing means restructuring the emergency response to mental health crises by reducing police intervention and relying on health professionals instead. Predictive programs do have the capacity to improve our society and keep our communities safe. For those goals to be realized, however, analytics need to be used to design social solutions and, importantly, to build targeted interventions for those the data deems ‘risky.’ At the end of the day, community engagement, transparency, and collaboration between sociologists, community members, lawyers, and program designers will be vital in transforming predictive policing to work for all.

References

[1] Sarah Brayne, Predict and Surveil: Data, Discretion, and the Future of Policing (Oxford University Press, 2021).

[2] Miles Kenyon, “Algorithmic Policing in Canada Explained,” The Citizen Lab, last modified September 1, 2020, https://citizenlab.ca/2020/09/algorithmic-policing-in-canada-explained/.

[3] Andrew G. Ferguson, “Whom We Police: Person-Based Predictive Targeting,” in The Rise of Big Data Policing: Surveillance, Race, and the Future of Law Enforcement (New York University Press, 2020), 49–51, 55.

[4] Thomas Abt, “Sticky Situations,” in Bleeding Out: The Devastating Consequences of Urban Violence--and a Bold New Plan for Peace in the Streets (Basic Books, 2019), 35–46.